‘Poverty porn’ is content (text, images, or video) that depicts people experiencing poverty, crisis, or trauma in ways that sensationalise, objectify, or solicit pity rather than empathy. ‘AI-generated poverty porn’ is a new expression of this historically harmful imagery: synthetic images styled to mimic documentary photos of people in poverty, crisis, or trauma. These visuals are cheap and fast to produce, widely available through stock libraries and creative platforms, and often seen as a way to sidestep consent and safeguarding requirements.

This resource offers practical guidance to NGOs as we collectively grapple with the increasing availability of AI-generated content and tools. Download it for free now.

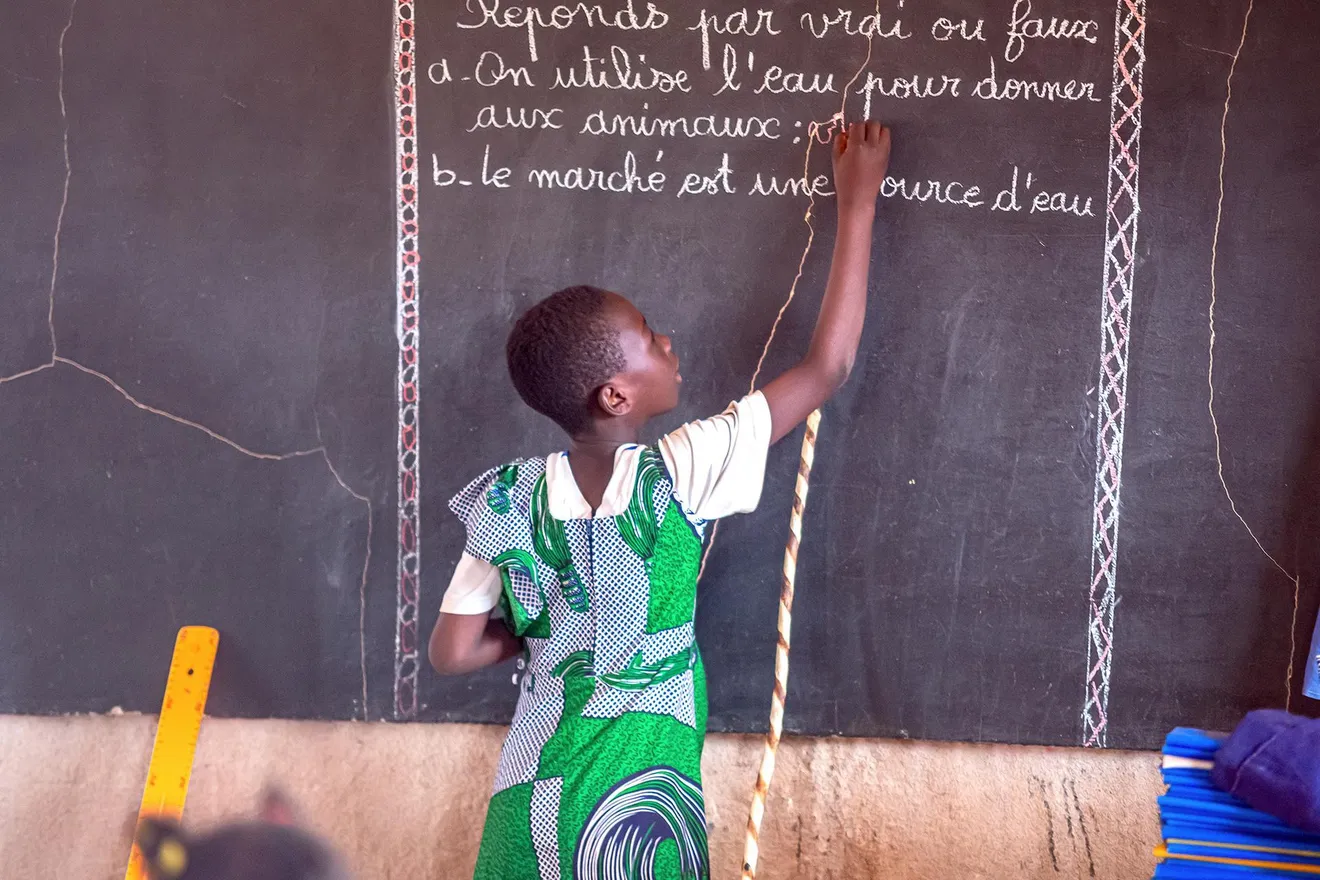

2025, Koudougou, Burkina Faso. Eleven-year-old Amandine Zongo reviews a problem at the chalkboard in her Life and Earth Sciences (SVT) lesson. Credit: Inoussa Baguian/EDM/Fairpicture


Kate Kardol

Generative AI tools now make it easy to fabricate images of poverty, crisis, or trauma that look real but aren’t. These so-called “AI-generated poverty porn” visuals sensationalise suffering, reinforce stereotypes, and mislead audiences.

Even when no real person is depicted, the harm is real: trust erodes, dignity is undermined, and entire communities are misrepresented.

Humanitarian communication is built on the principle of do no harm.

Whether or not a real person is depicted, synthetic “suffering” imagery distorts how audiences understand poverty and crisis, perpetuates racialised and colonial tropes, and damages credibility when the fabrication is discovered. The harm may be representational, operational, and long-term, but it is real.