A photograph has always been more than an image. It’s a fragment of trust, a bridge between a storyteller and a witness. But in 2025, that bridge is cracking.

Artificial intelligence can now generate heartbreaking scenes of hunger, war, and displacement in seconds. Faces that never existed. Tears without pain. Homes without history.

For NGOs and impact-based organizations, this is both an opportunity and a trap. On one hand, AI promises efficiency, privacy protection, and creative freedom. On the other, it risks destroying the ethical foundation of storytelling, not by inventing new problems, but by repeating old ones. The biases embedded in AI systems reflect decades of colonial and stereotypical narratives in humanitarian imagery. The tool itself isn’t the enemy; it merely magnifies the patterns we’ve yet to unlearn. AI doesn’t create ethical blind spots; it exposes them. The challenge isn’t the technology itself, but how we choose to use it within old frameworks of power and perception.

Some organizations are experimenting with synthetic “poverty” imagery. Yet even before AI, the problem was never just the tool; it was the perspective behind it. Many campaigns relied on real photographs that repeated the same stories. AI doesn’t change these dynamics; it amplifies them. At Fairpicture, we actively work against these patterns, promoting storytelling built on dignity, participation, and truth.

2024, Niger, Diffa. Child protection and education in Diffa - Bibata Lawal and her brother Hamid Lawal in class activities.

Photo: Abdoul-Rafik Gaïssa Chaïbou / SOS-Kinderdorf Schweiz / Fairpicture

In today’s NGO reality, communications teams operate under crushing pressure: tighter budgets, shrinking staff, and relentless demand for content.

AI seems like a dream solution: low-cost visuals, endless variations, fast turnaround. No travel, no translators, no consent forms. Just a few prompts, a few clicks, and your next campaign is ready.

But convenience often disguises a moral shortcut.

When real communities are replaced with algorithmic composites, we stop representing people and start replicating them.

We move from engagement to imitation, and imitation, no matter how realistic, carries a hidden cost: it removes people from their own stories.

In ethical storytelling, convenience without conscience is a cost, not a gain.

Noah Arnold, Fairpicture

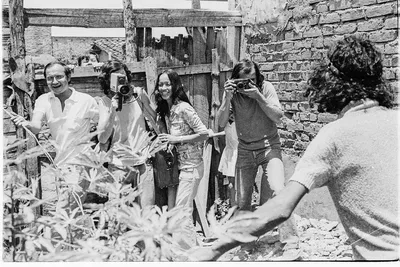

2025, La Ceiba, Honduras. Members of the El King artisanal fishermen's association.

Photo: Nahún Rodríguez/Fauna&Flora/Fairpicture

AI doesn’t invent bias, it scales it.

Most generative image models are trained on scraped datasets from the internet, where images of vulnerable people are already over-represented. Poverty, crisis, and despair become statistically “normal” visual outputs.

So, when a communicator types “child in need” into a prompt, the machine doesn’t see the human nuance of poverty. It reproduces an algorithmic memory of suffering: dark skin, dirt floors, pleading eyes. It recreates the stereotypes we’ve been trying to dismantle for decades.

These images may not depict real people, but they shape real perceptions.

They preserve outdated narratives: the helpless victim, the Western savior, the faceless “other.” And because they are synthetic, no one can correct context or claim consent.

The result? Suffering without accountability.

For most of photography’s history, consent was the exception, not the rule. From colonial archives to fundraising campaigns, images of vulnerable communities were taken and used without regard for permission or power. In the West, we cared about consent when it came to our own children, not the children on distant posters.

Only in recent years has this begun to change. Ethical storytelling has emerged as a response to that history, building awareness, respect, and accountability into visual practice. Tools like Fairpicture's FairConsent mark a real step forward, tangible progress toward fairness and transparency.

But AI now threatens to bypass these hard-won gains entirely, creating a new space where consent, context, and accountability risk disappearing once again.

AI turns the question of consent upside down. When no human subject exists, who gives consent? Who approves how “they” are portrayed? Who ensures fairness when the likeness is a collage of thousands of scraped images from real people who never agreed to be part of a dataset?

The consent paradox reveals a deeper truth: technology didn’t remove the ethical burden, it multiplied it.

NGOs can’t hide behind the excuse that “no real people were harmed,” because representation itself carries ethical weight. Every fabricated face still points toward someone real. Every stereotype reinforced still shapes public opinion.

Ethics must apply to likeness, not just to identity.

Rakai District, Uganda, 2025. Farmer Nalusiba Maria Evalister (R) giving consent through the FairConsent App.

Photo: Nyokabi Kahura / Fairpicture

NGO storytelling runs on trust. Donors, journalists, and audiences believe that the visuals they see reflect lived realities.

But when synthetic imagery enters the mix, especially without disclosure, that trust begins to fracture.

Imagine a supporter discovering that a powerful campaign image wasn’t captured in the field, but generated by a prompt. The immediate reaction isn’t awe. It’s betrayal.

Transparency isn’t optional. It’s the currency of modern communication.

Organizations that fail to disclose their AI use risk not only ethical backlash but long-term credibility damage. Audiences forgive imperfections; they don’t forgive deception.

The ethical rule of thumb is simple: if an image is not real, say so, clearly and proudly.

“Poverty porn,” the use of dehumanizing or exaggerated images of suffering to provoke donations, has haunted humanitarian communication for decades.

AI threatens to give it new life.

Synthetic visuals can amplify the aesthetics of despair without the constraints of reality. A machine will never flinch from making eyes larger, tears shinier, or skin rougher if that triggers empathy.

But empathy built on exaggeration is manipulation, not connection.

The mission of ethical communication is not to shock, but to contextualize. To help audiences understand, not just feel.

If AI tools are to play a role, they must serve storytelling rooted in dignity, participation, and truth, not emotional exploitation.

Madagascar, 2025. Florence (63) and her daughter Marcelinah (24) have been living in Toamasina for 4 years and have only been using Ranontsika water for 1 month.

Photo: iAko Randrianarivelo/1001Fontaines/Fairpicture

Ethical communication isn’t about rejecting technology; it’s about guiding it with purpose. Keeping people at the centre of innovation means rethinking how we define consent, authorship, and accountability.

At Fairpicture, we see this as more than a debate: it’s a practice. Through our FairConsent system, transparent image sourcing, and collaborations with local photographers, we help organizations tell real stories with dignity and accuracy. AI may have a role to play, but it should never replace human connection or lived truth.

Consent is no longer just a signature; it’s a responsibility that extends to likeness and representation. The more we insist on fairness from technology, the closer we come to keeping humanity at the heart of every image.

Technology moves faster than ethics, but ethics decides what survives.

AI will reshape visual communication forever. That’s inevitable. What’s not inevitable is whether it reshapes it for good.

If NGOs and storytellers treat AI as a shortcut, we’ll create a future of synthetic empathy, a world that feels deeply but knows nothing.

But if we approach AI as a partner in responsibility, not manipulation, we can build a new era of storytelling, one that is transparent, participatory, and profoundly human.

The next time we prompt a machine to “show a child in need,” let’s pause and ask:

Do we want to generate emotion, or understanding?

Because the future of ethical storytelling depends on our answer.
